The Ultimate Guide on Chunking Strategies – RAG (part 3)


Overview

Chunking in Large Language Model (LLM) applications breaks long texts down into smaller, manageable segments. Doing this well is crucial for retrieval relevance when the content is embedded and stored in a vector database. This guide explores the nuances of effective chunking strategies. This is part 3 of our RAG series; see part 1 and part 2 for an overview of the overall RAG pipeline.

Why Chunking is Necessary

- LLMs have a limited context window, so it is impractical to pass all of the available data in a single prompt.

- Chunking makes it possible to retrieve and send only the relevant segments to the LLM, which improves both the efficiency and the relevance of the generated responses.

Considerations Before Chunking

Document Structure and Length

- Long documents like books or extensive articles require larger chunk sizes to maintain sufficient context.

- Shorter documents such as chat sessions or social media posts benefit from smaller chunk sizes, often limited to a single sentence.

Embedding Model

The chunk size and the embedding model constrain each other, so they should be chosen together. For instance, sentence-transformer models are well suited to sentence-sized chunks, whereas models like OpenAI’s “text-embedding-ada-002” accept much longer inputs (up to 8,191 tokens) and may be better suited to larger chunks.

Expected Queries

- Short, fact-seeking queries are typically better served by smaller chunks.

- More in-depth questions may need larger chunks that provide broader context.

Chunk Size Considerations

- Small chunk sizes, like single sentences, offer accurate retrieval for granular queries but may lack sufficient context for effective generation.

- Larger chunk sizes, such as full pages or paragraphs, provide more context but may reduce the effectiveness of granular retrieval.

- Too much information can also degrade generation: more context does not always produce better answers.

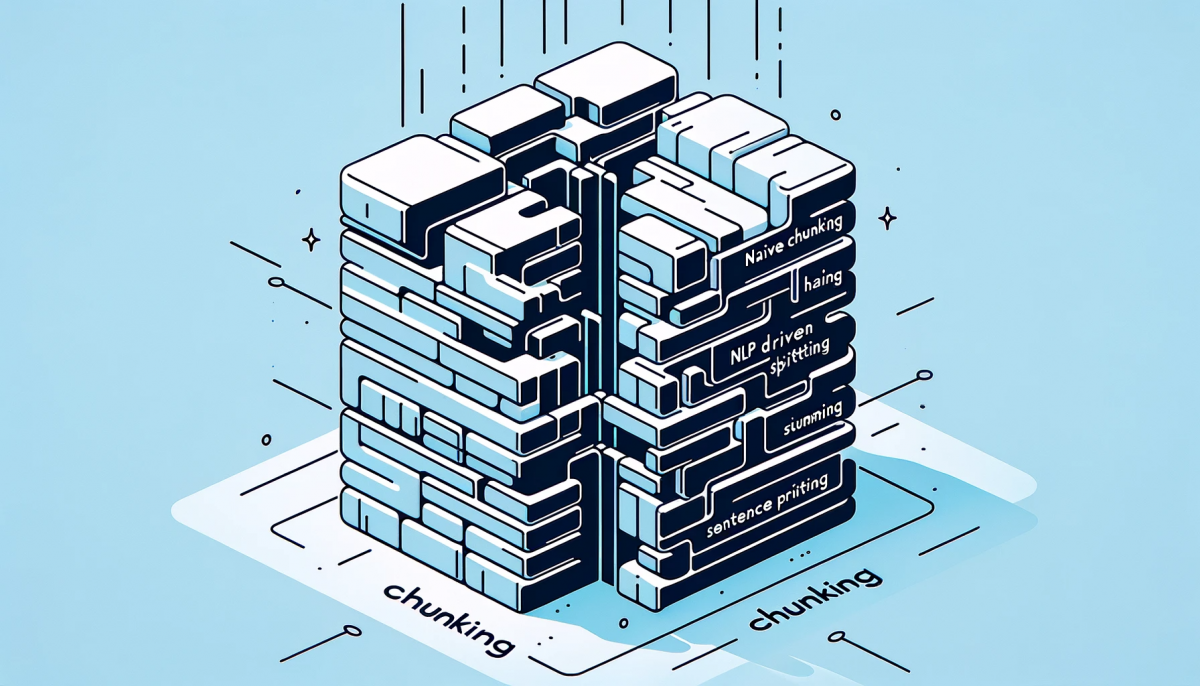

Chunking Methods

Naive Chunking

- Splits text into chunks of a fixed number of characters.

- Fast and simple, but ignores the structure of the data, such as headers or sections, and can cut words and sentences in half.
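The naive approach fits in a few lines of Python. This is a minimal illustration, not a library API: `fixed_size_chunks` is a hypothetical helper, and the `overlap` parameter is an assumption added here (overlapping windows are a common companion to fixed-size chunking). It repeats the last `overlap` characters of each chunk at the start of the next to soften the hard cut-off.

```python
def fixed_size_chunks(text: str, chunk_size: int = 100, overlap: int = 0) -> list[str]:
    """Naive chunking: fixed-size character windows, ignoring document structure."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    # Stop once a window would add no new characters beyond the previous one.
    last_start = max(len(text) - overlap, 1)
    return [text[i:i + chunk_size] for i in range(0, last_start, step)]


print(fixed_size_chunks("abcdefghij", chunk_size=4))             # ['abcd', 'efgh', 'ij']
print(fixed_size_chunks("abcdefghij", chunk_size=4, overlap=2))  # ['abcd', 'cdef', 'efgh', 'ghij']
```

The splitter has no notion of words or sentences: it cuts wherever the character count says to.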

Naive Sentence Chunking

- Splits text at every period.

- Not always reliable, because periods also occur inside sentences (for example in abbreviations such as “Dr.” or decimal numbers such as 3.50), not just at their end.
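A minimal sketch of naive sentence chunking makes the failure mode concrete. The helper name `naive_sentence_chunks` is hypothetical. In the example below, both an abbreviation and a decimal number are cut apart.

```python
def naive_sentence_chunks(text: str) -> list[str]:
    """Split on every period and re-append it.

    Has no notion of abbreviations or numbers, and also re-adds a period
    to a trailing fragment that never had one.
    """
    return [piece.strip() + "." for piece in text.split(".") if piece.strip()]


print(naive_sentence_chunks("Dr. Smith paid 3.50 dollars. He left."))
# ['Dr.', 'Smith paid 3.', '50 dollars.', 'He left.']
```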

NLP-Driven Sentence Splitting

Uses natural language processing libraries such as NLTK or spaCy to detect sentence boundaries from linguistic cues, so that periods in abbreviations and similar cases are not mistaken for sentence ends.
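As one way to do this, the sketch below assumes spaCy 3.x is installed. It uses a blank English pipeline with spaCy's rule-based `sentencizer` component, which needs no trained model download. Because spaCy's English tokenizer keeps “Dr.” and “3.50” as single tokens, the sentencizer does not split on their periods. The function name `spacy_sentence_chunks` is hypothetical. A trained pipeline (for example `en_core_web_sm`) or NLTK's `sent_tokenize` are alternatives, each with its own setup steps.

```python
import spacy

# Blank English pipeline + rule-based sentence boundary detection.
# The English tokenizer's exception list keeps abbreviations like "Dr." intact,
# and decimal numbers like "3.50" stay one token, so their periods are not boundaries.
nlp = spacy.blank("en")
nlp.add_pipe("sentencizer")


def spacy_sentence_chunks(text: str) -> list[str]:
    """Return one chunk per sentence detected by spaCy."""
    return [sent.text for sent in nlp(text).sents]


print(spacy_sentence_chunks("Dr. Smith paid 3.50 dollars. He left."))
# ['Dr. Smith paid 3.50 dollars.', 'He left.']
```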

Recursive Character Text Splitter

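Recursively splits text using an ordered list of separators, such as paragraph breaks, then line breaks, then spaces, and moves to a finer separator only when a piece is still larger than the target chunk size. This keeps paragraphs and sentences intact as much as possible.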
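A simplified sketch of the recursive idea in plain Python follows. It is not LangChain's `RecursiveCharacterTextSplitter`, although it borrows that splitter's default separator order (`"\n\n"`, `"\n"`, `" "`, `""`). The name `recursive_split` is hypothetical, chunk size is measured in characters rather than tokens, and no chunk overlap is applied.

```python
def recursive_split(text: str, chunk_size: int = 200,
                    separators: tuple[str, ...] = ("\n\n", "\n", " ", "")) -> list[str]:
    """Split on the coarsest separator present; recurse with finer ones only for oversized pieces."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    # Coarsest separator that occurs in the text; "" means "fall back to raw characters".
    sep = next((s for s in separators if s == "" or s in text), "")
    if sep == "":
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    finer = separators[separators.index(sep) + 1:]

    chunks: list[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate              # keep merging neighboring pieces
            continue
        if current.strip():
            chunks.append(current)           # flush the merged run before it overflows
        if len(piece) <= chunk_size:
            current = piece
        else:
            chunks.extend(recursive_split(piece, chunk_size, finer))  # too big: go finer
            current = ""
    if current.strip():
        chunks.append(current)
    return chunks


text = "Para one is short.\n\nPara two is a bit longer than one."
print(recursive_split(text, chunk_size=20))
# ['Para one is short.', 'Para two is a bit', 'longer than one.']
```

The first paragraph fits within the limit and is kept whole. Only the oversized second paragraph is broken down further, at spaces.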

Structural Chunkers

- Splits HTML and Markdown files based on headers and sections.

- Each chunk is tagged with metadata recording the headers and sub-sections it belongs to, which preserves the document's organization.
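For Markdown, a structural chunker can be sketched as follows. This is a minimal, hypothetical implementation (`split_markdown_by_headers` is not a library function). It handles only ATX-style `#` headers and ignores `#` lines inside code fences. HTML would need a real parser, and libraries such as LangChain ship ready-made `MarkdownHeaderTextSplitter` and `HTMLHeaderTextSplitter` classes.

```python
import re

HEADER_RE = re.compile(r"^(#{1,6})\s+(.+)$")  # ATX-style headers: "# Title", "## Sub", ...


def split_markdown_by_headers(md: str) -> list[dict]:
    """One chunk per section, tagged with the header path it sits under."""
    chunks: list[dict] = []
    headers: dict[int, str] = {}   # header level -> title of the enclosing section
    body: list[str] = []
    in_fence = False

    def flush() -> None:
        text = "\n".join(body).strip()
        if text:
            # Metadata records the enclosing headers, e.g. {"h1": "Guide", "h2": "Setup"}.
            meta = {f"h{level}": title for level, title in sorted(headers.items())}
            chunks.append({"content": text, "metadata": meta})
        body.clear()

    for line in md.splitlines():
        if line.lstrip().startswith("```"):
            in_fence = not in_fence          # "#" inside code fences is not a header
        match = None if in_fence else HEADER_RE.match(line)
        if match:
            flush()                          # a new header closes the previous section
            level = len(match.group(1))
            for deeper in [lvl for lvl in headers if lvl >= level]:
                del headers[deeper]          # leaving a section also leaves its sub-sections
            headers[level] = match.group(2).strip()
        else:
            body.append(line)
    flush()
    return chunks


md = "# Guide\nIntro text.\n## Setup\nInstall it.\n## Usage\nRun it.\n"
for chunk in split_markdown_by_headers(md):
    print(chunk)
# {'content': 'Intro text.', 'metadata': {'h1': 'Guide'}}
# {'content': 'Install it.', 'metadata': {'h1': 'Guide', 'h2': 'Setup'}}
# {'content': 'Run it.', 'metadata': {'h1': 'Guide', 'h2': 'Usage'}}
```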

Summarization Chains

- Involves summarizing each document and sending these summaries, rather than the full text, into the context.

- For documents too long to summarize in a single pass, methods like ‘map-reduce’ are used: the document is chunked, each chunk is summarized separately, and the partial summaries are then combined into one.

- The ‘refine’ method is another approach, in which a running summary is iteratively updated as each chunk is processed in order.
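Both summarization strategies can be sketched independently of any particular LLM provider. In the code below, `llm` is a hypothetical callable that sends a prompt to a model and returns its text, and the prompt wording is illustrative only. The point is the control flow. Map-reduce summarizes every chunk independently and then merges the results. Refine threads a single running summary through the chunks in order.

```python
from typing import Callable

# Hypothetical stand-in for an LLM call: takes a prompt, returns the model's text.
LLM = Callable[[str], str]


def map_reduce_summary(chunks: list[str], llm: LLM) -> str:
    # Map: summarize each chunk independently (these calls could run in parallel).
    partials = [llm(f"Summarize this text:\n{chunk}") for chunk in chunks]
    # Reduce: merge the partial summaries into a single summary.
    return llm("Combine these summaries into one:\n" + "\n".join(partials))


def refine_summary(chunks: list[str], llm: LLM) -> str:
    if not chunks:
        return ""
    summary = llm(f"Summarize this text:\n{chunks[0]}")
    # Refine: walk the chunks in order, updating one running summary.
    for chunk in chunks[1:]:
        summary = llm(
            f"Current summary:\n{summary}\n\n"
            f"Update it using this additional text:\n{chunk}"
        )
    return summary
```

Map-reduce makes one call per chunk plus a final combine call, and the per-chunk calls can run in parallel. Refine makes one call per chunk, but the calls must run sequentially. If the partial summaries are themselves too long for one combine call, the reduce step may need to be applied in several rounds.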

Chunking Decoupling (Small to Big)

- Summary chunks are tagged with the original file link in their metadata.

- When a summary is retrieved, the corresponding full document can be injected into the context instead of just the summary.

- This method can also be applied to sentence chunks: a retrieved sentence can be expanded to the surrounding snippet or to the entire document, depending on the available context length and the document's size.
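The small-to-big pattern can be sketched with sentence-level chunks, each tagged with the id of its source document. Everything here is an illustrative assumption. The names are hypothetical, sentences are split with a simple regular expression, and `overlap_score` (shared words) stands in for embedding similarity. The retrieved sentence is replaced by its whole document when that fits within a `max_chars` budget, and otherwise by a window of neighboring sentences. Summary chunks would work the same way, with the summary text in place of the sentence.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    doc_id: str    # metadata: link back to the full source document
    position: int  # sentence index within that document


def index_sentences(docs: dict[str, str]) -> list[Chunk]:
    """Small chunks: one per sentence, each tagged with its parent document."""
    chunks: list[Chunk] = []
    for doc_id, text in docs.items():
        sentences = re.split(r"(?<=[.!?])\s+", text.strip())
        chunks.extend(Chunk(s, doc_id, i) for i, s in enumerate(sentences))
    return chunks


def words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def overlap_score(query: str, text: str) -> int:
    # Toy relevance score (shared lowercase words); a real pipeline would compare embeddings.
    return len(words(query) & words(text))


def small_to_big(query: str, chunks: list[Chunk], docs: dict[str, str],
                 max_chars: int, window: int = 1) -> str:
    best = max(chunks, key=lambda c: overlap_score(query, c.text))  # match on the small chunk
    full_doc = docs[best.doc_id]
    if len(full_doc) <= max_chars:
        return full_doc  # the whole parent document fits in the context budget
    # Otherwise expand only to the neighboring sentences of the same document.
    nearby = [c.text for c in chunks
              if c.doc_id == best.doc_id and abs(c.position - best.position) <= window]
    return " ".join(nearby)


docs = {"pets": "Cats purr. Cats sleep a lot. Dogs bark. Birds sing.",
        "langs": "Rust is fast. Rust is safe."}
chunks = index_sentences(docs)
print(small_to_big("How much do cats sleep?", chunks, docs, max_chars=1000))
# Cats purr. Cats sleep a lot. Dogs bark. Birds sing.
print(small_to_big("How much do cats sleep?", chunks, docs, max_chars=20))
# Cats purr. Cats sleep a lot. Dogs bark.
```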

Conclusion

This article covered another step of the RAG pipeline built on Large Language Models. Stay tuned for Part 4 of the series, which will focus on the Retriever, the heart of the RAG system, with an in-depth look at how this pivotal component shapes the pipeline's efficiency and accuracy.