Best Prompt Engineering Techniques Part 3

We explored the most widely used prompt engineering techniques in the first and second parts of this series. As prompt engineers continue to experiment and innovate, additional effective techniques have emerged from the prompt engineering community. In this third part, we cover the following:

- Active Prompt

- Tree of Thoughts Prompting

- Multimodal CoT

- Reflexion

- Directional Stimulus Prompting

Active Prompt

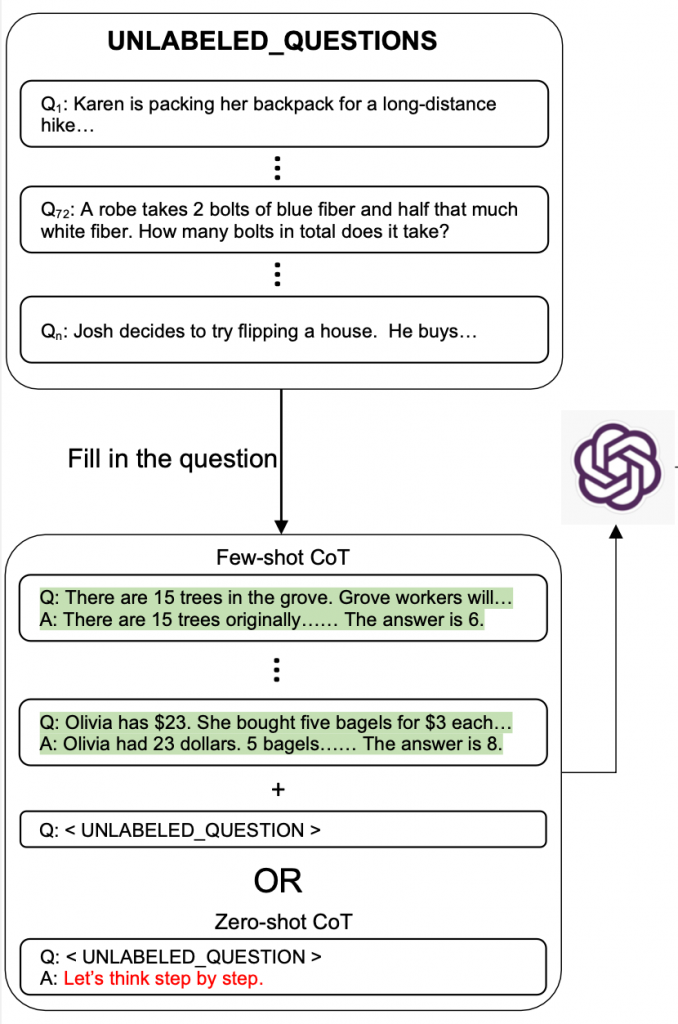

Active Prompting refines Chain of Thought (CoT) prompting. A key issue with CoT prompting is that its examples are hand-picked by humans, and a fixed set of examples might not be the most helpful one for a given task. The question then becomes: how do we ensure the examples used in CoT are effective enough to yield accurate answers? To address this, Diao et al. (2023) introduced the ‘active prompting’ technique, which finds the questions the model is most uncertain about and has humans annotate those.

Steps Involved:

- Create a List of Training Questions:

- Examples:

- Karen is packing her backpack for a long-distance hike. She has 10 items to fit in, but only room for 7. How many items will she leave behind?

- A robe takes 2 bolts of blue fiber and half that much white fiber. How many bolts in total does it take?

- Josh decides to try flipping a house. He buys a house for $200,000 and spends $50,000 on renovations. If he sells the house for $300,000, what is his profit?

- Liam has a garden with 12 different types of flowers. He decides to plant an equal number of each type in 3 different rows. How many flowers will be in each row?

- Mia is organizing a bookshelf that can hold 60 books. She already has 35 books and buys 20 more. How many more books can she add to the shelf?

- Sam is preparing for a marathon. He runs 5 miles a day for 6 days a week. How many miles will he run in 4 weeks?

- A bakery sells cupcakes in boxes of 8. If a customer buys 5 boxes, how many cupcakes do they have?

- Use Normal CoT Prompting:

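- For each training question, use CoT prompting to generate an answer together with its intermediate reasoning steps. If you use a few of the questions as worked examples in the prompt, leave out the question being answered.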

- Repeat k Times and Calculate Uncertainty Scores:

- Repeat the process k times for each question (for instance, k = 5) to obtain k answers.

- Determine the uncertainty score from the k answers (5 answers in this example). One common measure is disagreement: the number of distinct answers divided by k.

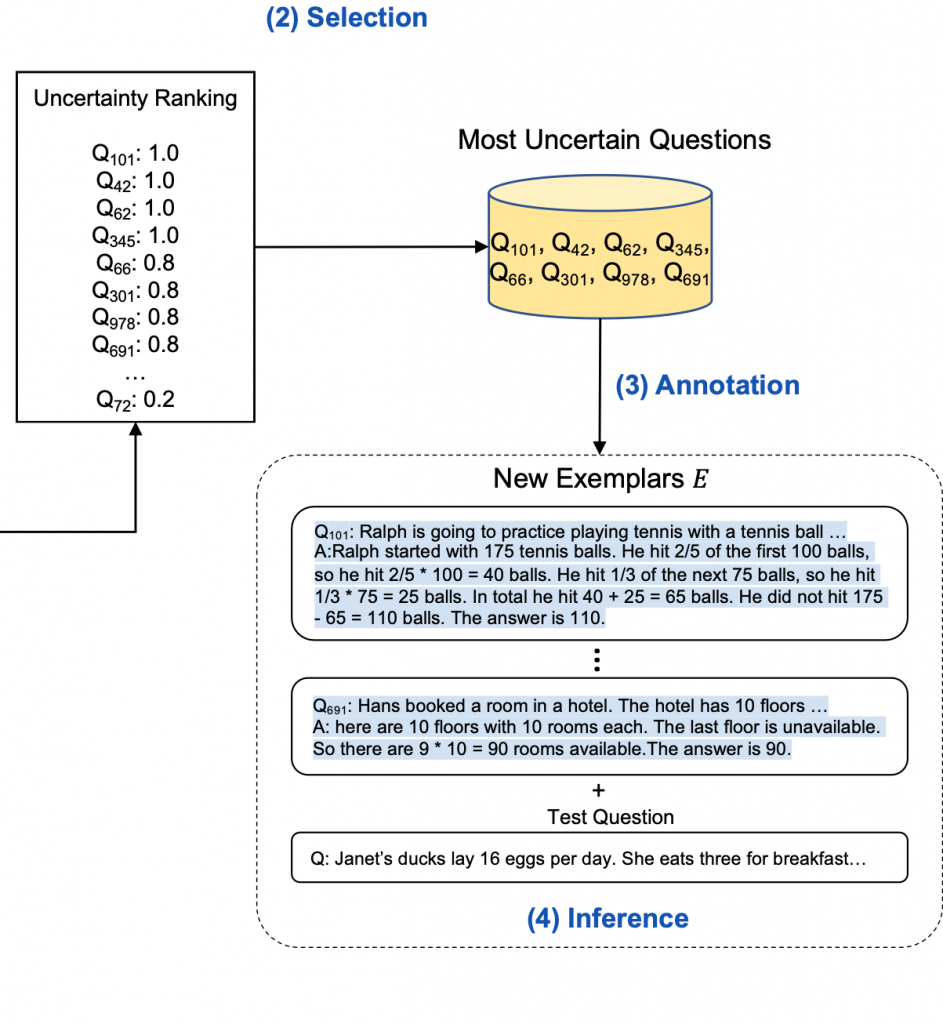

- Sort Questions:

- Arrange all questions in descending order based on their uncertainty scores.

- Annotate Top Questions:

- Choose the top 2 or 3 questions and have humans annotate them by writing out the correct reasoning steps and answer for each.

- Use Annotated Pairs:

- Use these human-annotated question-and-answer pairs as the examples in your CoT prompt when generating answers to new questions.
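
The scoring and sorting steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the sampled answers are invented for the example, and the helper names (`disagreement`, `entropy`, `rank_by_uncertainty`) are hypothetical. It assumes you have already collected k = 5 answers per question from the model.

```python
from collections import Counter
from math import log


def disagreement(answers):
    """Uncertainty as the fraction of distinct answers among the k samples."""
    return len(set(answers)) / len(answers)


def entropy(answers):
    """Alternative score: Shannon entropy of the empirical answer distribution."""
    k = len(answers)
    return -sum((c / k) * log(c / k) for c in Counter(answers).values())


def rank_by_uncertainty(samples, score=disagreement):
    """Sort question ids from most to least uncertain."""
    return sorted(samples, key=lambda q: score(samples[q]), reverse=True)


# Hypothetical answers (k = 5) sampled for three of the training questions.
samples = {
    "backpack": ["3", "3", "3", "3", "3"],
    "robe": ["3", "3", "2.5", "3", "4"],
    "house flip": ["50000", "100000", "50000", "250000", "75000"],
}

ranked = rank_by_uncertainty(samples)
to_annotate = ranked[:2]  # the questions a human would annotate next
print(to_annotate)
```

Questions on which the model always gives the same answer score lowest, so human annotation effort goes to the questions where the model is least consistent.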

Tree of Thoughts Prompting

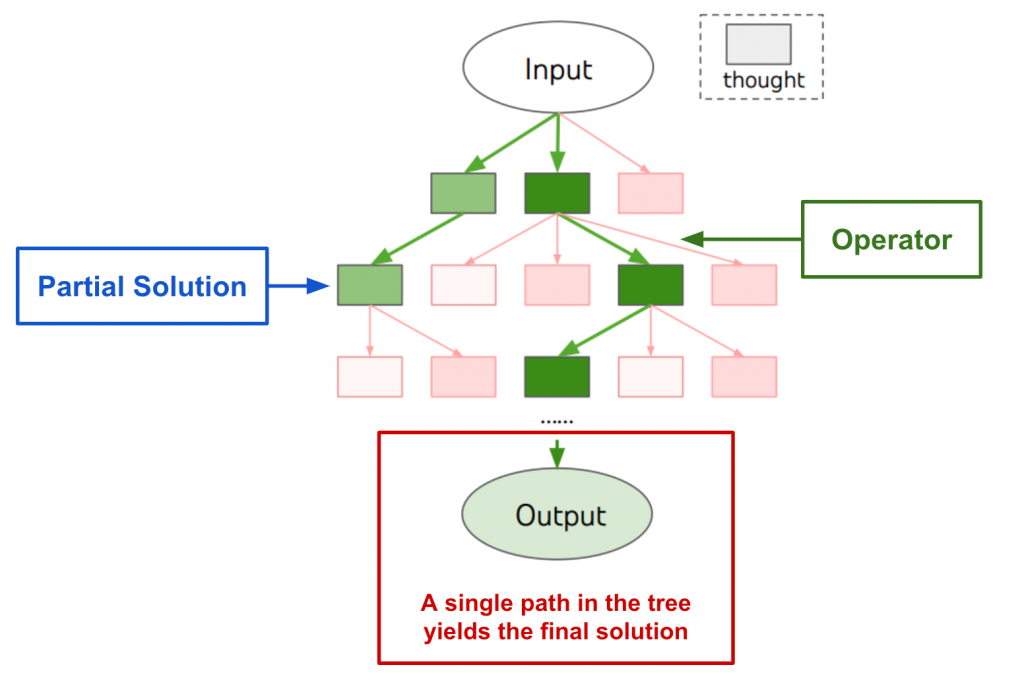

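Tree of Thoughts (ToT) Prompting, introduced by Shunyu Yao et al. in 2023, generalizes CoT prompting. Instead of following a single chain of reasoning, the model explores several candidate reasoning paths, evaluates them, and continues only with the most promising ones.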

Visualizing the ‘Tree of Thoughts’ Concept:

Think of a tree’s structure: a solid trunk represents the main topic, and as it branches out, each branch represents a more specific aspect or query related to the main topic.

Components:

- The Core Foundation (Trunk):

- This initial prompt sets the foundation and provides direction and context for subsequent prompts.

- Branching Out (Subtopics and Related Ideas):

- These are follow-up questions or sub-topics stemming from the primary prompt, adding depth and clarity.

Example:

Dave Hulbert’s 2023 repository demonstrated using ToT to solve a riddle. ChatGPT 3.5 initially struggled but performed better when the prompt followed a ToT format.

Game Example:

To illustrate the ToT technique, consider the Game of 24 puzzle. The goal is to reach the number 24 by combining the input numbers “4 5 6 10” with basic arithmetic operations, using each number exactly once. Here’s a solution:

- Step 1:

- Generate — The model generates eight possible proposals for the first step, e.g., 10 – 4 = 6 (remaining: 5, 6, 6).

- Evaluate — The model evaluates each proposal three times and sums the scores.

- Select — The model selects the best five proposals based on their evaluation scores.

- Step 2:

- Generate — For each of the five nodes, the model generates eight possible proposals for the second step, e.g., 5 * 6 = 30 (remaining: 6, 30).

- Evaluate — The model evaluates each proposal.

- Select — The model selects the best five proposals to form the second layer of the tree.

- Step 3:

- Generate — For each selected node, the model generates eight possible proposals for the third step, e.g., 30 – 6 = 24 (remaining: 24).

- Evaluate — The model evaluates each proposal.

- Select — The model selects the best proposals for the next layer.

- Step 4:

- Generate — For each proposed solution that correctly solves the puzzle, a formal answer is generated in the desired format.

Thus, the answer is (5 × (10 − 4)) − 6 = 24.

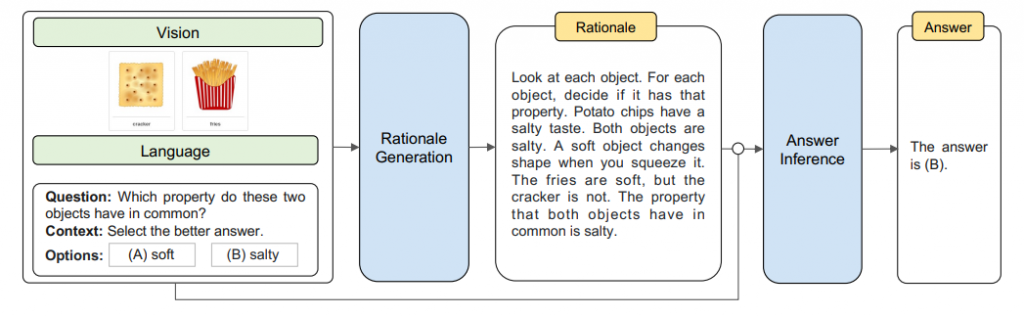
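
The generate, evaluate, and select loop can be sketched as a small breadth-first search. This is a simplified illustration under stated assumptions, not the paper's implementation: both the proposal step and the evaluation step would normally be LLM calls, and here they are replaced with deterministic stand-ins (exhaustive enumeration and an exact reachability check). Because the stand-in evaluator is deterministic, each proposal is scored once rather than three times. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b if b != 0 else None,  # skip division by zero
}


def propose(state):
    """Generate: combine any two remaining numbers (stand-in for LLM proposals)."""
    numbers, steps = state
    for i, j in combinations(range(len(numbers)), 2):
        rest = [n for k, n in enumerate(numbers) if k not in (i, j)]
        for a, b in ((numbers[i], numbers[j]), (numbers[j], numbers[i])):
            for symbol, op in OPS.items():
                value = op(a, b)
                if value is not None:
                    step = f"{a} {symbol} {b} = {value}"
                    yield tuple(rest + [value]), steps + (step,)


def reachable(numbers, target):
    """True if the target can still be produced from the remaining numbers."""
    if len(numbers) == 1:
        return numbers[0] == target
    return any(reachable(nums, target) for nums, _ in propose((numbers, ())))


def evaluate(state, target):
    """Evaluate: score a partial solution (stand-in for an LLM value prompt)."""
    return 1 if reachable(state[0], target) else 0


def tree_of_thoughts(numbers, target=24, beam_width=5):
    """Keep the best `beam_width` partial solutions at each layer of the tree."""
    frontier = [(tuple(Fraction(n) for n in numbers), ())]
    for _ in range(len(numbers) - 1):
        candidates = [child for state in frontier for child in propose(state)]
        candidates.sort(key=lambda s: evaluate(s, target), reverse=True)  # Select
        frontier = candidates[:beam_width]
    for remaining, steps in frontier:
        if remaining == (target,):
            return steps
    return None


solution = tree_of_thoughts([4, 5, 6, 10])
for step in solution:
    print(step)
```

With a real model, the evaluator is noisy, which is why the walkthrough above scores each proposal several times and sums the results before selecting.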

Multimodal CoT

Multimodal CoT enhances reasoning in Large Language Models (LLMs) by incorporating both text and images.

Two-Stage Framework:

- Rationale Generation:

- Combines language and vision inputs to generate intermediate reasoning steps.

- Answer Inference:

- Uses the rationale from the first stage along with the original vision input to infer the final answer.
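
As a rough sketch, the two stages can be wired together as below. Everything here is illustrative: the `VisionLanguageModel` interface, the prompt layout that appends the rationale to the question, and the two stub models are assumptions made so the example runs on its own. In practice each stage would call a real vision-language model.

```python
from typing import Callable, Tuple

# Hypothetical model interface: (text, image bytes) -> generated text.
VisionLanguageModel = Callable[[str, bytes], str]


def multimodal_cot(
    question: str,
    image: bytes,
    rationale_model: VisionLanguageModel,
    answer_model: VisionLanguageModel,
) -> Tuple[str, str]:
    # Stage 1: rationale generation from the text and the image.
    rationale = rationale_model(question, image)
    # Stage 2: answer inference from the rationale plus the original image.
    answer = answer_model(f"{question}\nRationale: {rationale}", image)
    return rationale, answer


# Placeholder models so the sketch is runnable; they do not inspect the image.
def stub_rationale_model(text: str, image: bytes) -> str:
    return "The image shows two magnets with like poles facing each other."


def stub_answer_model(text: str, image: bytes) -> str:
    return "repel" if "like poles" in text else "attract"


rationale, answer = multimodal_cot(
    "Will these magnets attract or repel?",
    b"\x89PNG",  # placeholder bytes standing in for a real image
    stub_rationale_model,
    stub_answer_model,
)
print(rationale)
print(answer)
```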

Reflexion

Reflexion mirrors human intelligence by allowing AI to learn from its mistakes. This iterative problem-solving method is particularly useful in fields without a definitive ground truth.

Technique:

With a model such as GPT-4, Reflexion involves attempting a task, analyzing the mistakes in that attempt, and carrying the lessons into the next attempt in a self-contained loop. Using feedback to refine its strategy in this way improves the AI’s ability to solve complex tasks.

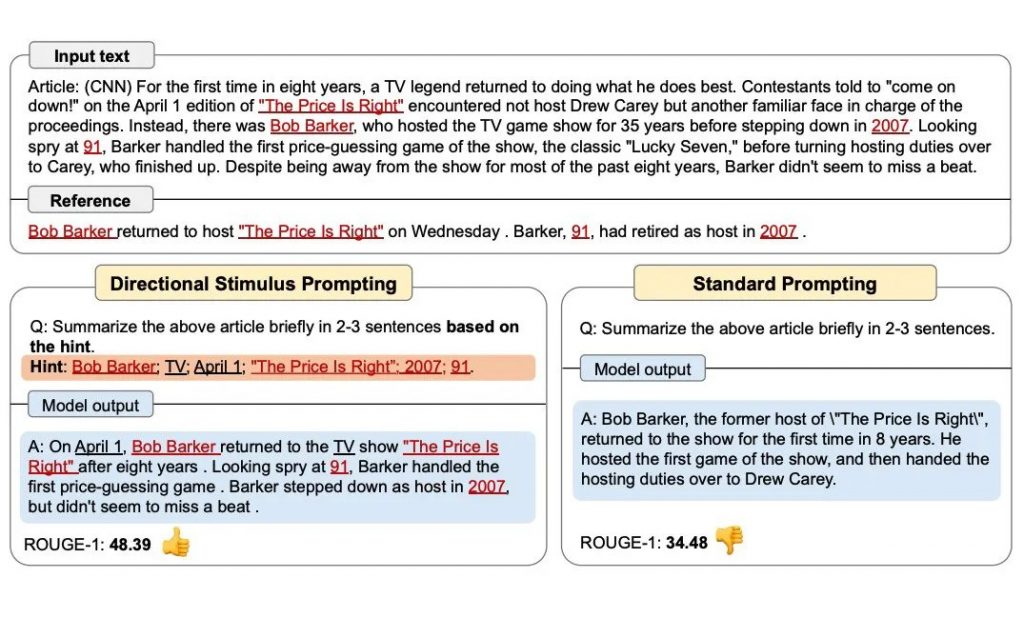
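
The loop can be sketched as follows. This is a toy illustration, not the original implementation: the `actor`, `evaluator`, and `reflect` callables would normally be LLM calls or task-specific checks, and here they are hypothetical stand-ins for a number-guessing task so the example runs without a model. The format of the reflection notes is also an assumption.

```python
def reflexion(task, actor, evaluator, reflect, max_trials=5):
    """Attempt, evaluate, reflect, and retry with the reflections as memory."""
    memory = []  # reflections carried over between trials
    for _ in range(max_trials):
        attempt = actor(task, memory)
        success, feedback = evaluator(task, attempt)
        if success:
            return attempt, memory
        memory.append(reflect(attempt, feedback))
    return None, memory


# Toy stand-ins: the "task" is to find a hidden number within a range.
def toy_actor(task, memory):
    low, high = task["low"], task["high"]
    for note in memory:  # use past reflections to narrow the range
        verdict, value = note.split(": ")
        if verdict == "too high":
            high = int(value) - 1
        else:
            low = int(value) + 1
    return (low + high) // 2


def toy_evaluator(task, attempt):
    if attempt == task["secret"]:
        return True, "correct"
    return False, "too high" if attempt > task["secret"] else "too low"


def toy_reflect(attempt, feedback):
    return f"{feedback}: {attempt}"


task = {"low": 0, "high": 100, "secret": 37}
answer, notes = reflexion(task, toy_actor, toy_evaluator, toy_reflect)
print(answer, notes)
```

The key design point is that the model's weights never change. Only the memory of reflections grows, and it is passed back into the next attempt.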

Directional Stimulus Prompting

This technique involves adding a small hint, such as a few keywords, to the initial prompt to guide the model towards the desired output. By steering the model’s reasoning in a specific direction, the hint helps it understand and deliver the expected results.
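
A prompt with a directional hint might be assembled like this. The template wording, the function name `build_prompt`, and the sample article and keywords are all assumptions for illustration. The hint could come from a person or from a separate helper model.

```python
def build_prompt(article, hint_keywords):
    """Build a summarization prompt that includes keyword hints."""
    hint = "; ".join(hint_keywords)
    return (
        f"Article: {article}\n"
        f"Hint: {hint}\n"
        "Summarize the article in 2-3 sentences, using the hint as a guide."
    )


# Hypothetical article and keywords.
prompt = build_prompt(
    "The city council voted on Tuesday to extend the tram line to the airport.",
    ["city council", "tram line", "airport"],
)
print(prompt)
```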

Conclusion

In this part of our series, we’ve explored advanced prompt engineering techniques that can significantly enhance the performance of AI models. Techniques like Active Prompt, Tree of Thoughts Prompting, Multimodal CoT, Reflexion, and Directional Stimulus Prompting represent the cutting edge of prompt engineering. As the field of prompt engineering continues to evolve, these innovative approaches will push the boundaries of what AI can achieve, driving better outcomes in various applications.

By understanding and applying these techniques, prompt engineers can get more accurate and reliable results from AI models. Embracing these advanced prompt engineering techniques will help AI systems keep pace with the ever-increasing demands of users and applications.